Unlocking the Non-deterministic Computing Power with Memory-Elastic Multi-Exit Neural Networks

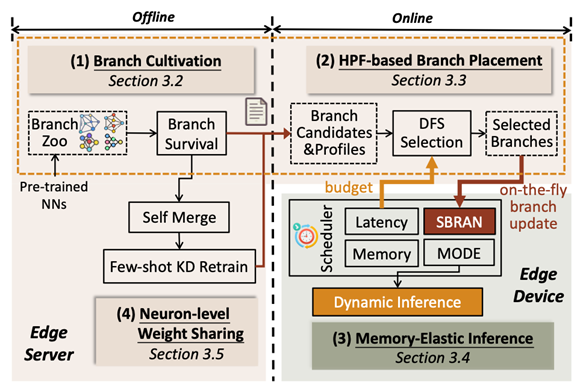

With the increasing demand for Web of Things (WoT) and edge computing, the efficient utilization of limited computing power on edge devices is becoming a crucial challenge. Traditional neural networks (NNs) deployed as web services rely on deterministic computational resources. On non-deterministic computing power, which can be preempted at any time, they may fail to output any result, degrading task performance significantly. Multi-exit NNs with multiple branches have been proposed as a solution, but the accuracy of their intermediate results may be unsatisfactory. In this paper, we propose MEEdge, a system that automatically transforms classic single-exit models into heterogeneous and dynamic multi-exit models, enabling Memory-Elastic inference at the Edge with non-deterministic computing power. To build heterogeneous multi-exit models, MEEdge uses efficient convolutions to form a branch zoo and a High Priority First (HPF)-based branch placement method for branch growth. To adapt models to dynamically varying computational resources, we employ a novel on-device scheduler for collaboration. Further, to reduce the memory overhead caused by dynamic branches, we propose neuron-level weight sharing and few-shot knowledge distillation (KD) retraining. Our experimental results show that models generated by MEEdge can achieve up to 27.31% better performance than existing multi-exit NNs.
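To make the multi-exit idea concrete, the following minimal sketch shows generic anytime inference: backbone stages run in order, each followed by an exit branch, and the most recent exit's prediction is returned if computing power is withdrawn. It is an illustration under stated assumptions, not MEEdge's scheduler or branch design; the names `anytime_inference` and `budget_available` and the toy stages and exits are hypothetical.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]
Stage = Callable[[Vector], Vector]   # one backbone block: features -> features
Exit = Callable[[Vector], Vector]    # one exit branch: features -> class scores


def anytime_inference(
    x: Vector,
    stages: Sequence[Stage],
    exits: Sequence[Exit],
    budget_available: Callable[[int], bool],
) -> Optional[Tuple[int, int]]:
    """Return (exit_index, predicted_label) of the last exit reached.

    budget_available(i) is a hypothetical stand-in for a preemption signal:
    it reports whether stage i may still run. Returns None if no exit ran.
    """
    features = list(x)
    latest: Optional[Tuple[int, int]] = None
    for i, (stage, exit_branch) in enumerate(zip(stages, exits)):
        if not budget_available(i):
            break  # compute was preempted; fall back to the latest result
        features = stage(features)
        scores = exit_branch(features)
        label = max(range(len(scores)), key=scores.__getitem__)
        latest = (i, label)
    return latest


# Toy model (assumption): three stages that add 1 to every feature,
# and three exits that produce two class scores each.
toy_stages = [lambda f: [v + 1.0 for v in f]] * 3
toy_exits = [
    lambda f: [f[0], f[1]],
    lambda f: [f[0], -f[1]],
    lambda f: [f[0], f[1]],
]

# Compute is preempted before the third stage.
preempted = anytime_inference([1.0, 2.0], toy_stages, toy_exits, lambda i: i < 2)
# Compute is never available.
starved = anytime_inference([1.0, 2.0], toy_stages, toy_exits, lambda i: False)
```

A single-exit model corresponds to the case where only the final exit exists, so any preemption before the last stage yields no result at all; with intermediate exits, a preempted run still returns the prediction of the deepest exit it reached.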